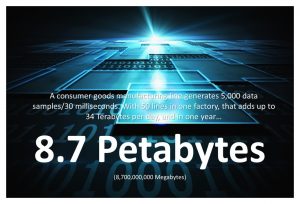

The amount of data being produced and captured from the plant floor today is staggering. When you add it all up, a factory can easily generate over 34 terabytes of data per day. Depending on whether the plant runs about 260 days a year or all 365, that adds up to roughly 9 to 12 petabytes a year, or 9 to 12 million gigabytes! Yet, with all of those systems and all of that data:

- Equipment still fails

- Scrap is still produced

- Safety incidents still occur

- Schedules get missed

There have been enormous investments in automation, robotics, supervisory control, quality control, and execution systems, to name just a few. Machines have been computerized and safety systems installed. The data from those computers is captured by SCADA systems, graphically represented, trended, alarmed, and stored in a data repository (typically a data historian). Quality control methods such as statistical process control (SPC) have been applied to these processes, yet defects still make their way into customers’ hands. Manufacturing operations have been digitally represented from raw materials to work in process to finished goods. Manufacturing Execution Systems (MES) take orders from the business system, apply finite scheduling algorithms, dispatch work orders, track material against product and process specifications, and manage genealogy, yet we still produce poor-quality product. Manufacturing Intelligence (MI) tells us how well we are executing the process: how our equipment operates (OEE), the quality produced (yield), and how products were made. Still, we produce poor-quality product. So there must be things about our manufacturing processes that we don’t know.
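The OEE figure that MI systems report is conventionally the product of three factors: availability (run time over planned time), performance (ideal cycle time multiplied by parts produced, over run time), and quality (good parts over total parts). A minimal Python sketch of that calculation follows; the `ShiftCounts` structure and the shift numbers are hypothetical illustrations, not taken from any particular MI product.

```python
from dataclasses import dataclass


@dataclass
class ShiftCounts:
    """Hypothetical per-shift counters an MI system might collect."""
    planned_minutes: float       # planned production time
    downtime_minutes: float      # stops during planned production time
    ideal_cycle_seconds: float   # ideal time to make one part
    total_count: int             # parts produced, good and bad
    good_count: int              # parts that passed quality checks


def oee(s: ShiftCounts) -> tuple[float, float, float, float]:
    """Return (availability, performance, quality, oee), each as a fraction of 1."""
    run_minutes = s.planned_minutes - s.downtime_minutes
    availability = run_minutes / s.planned_minutes
    # Ideal time needed for the parts actually made, divided by the time actually run.
    performance = (s.ideal_cycle_seconds * s.total_count) / (run_minutes * 60)
    quality = s.good_count / s.total_count  # the "yield" side of the picture
    return availability, performance, quality, availability * performance * quality


# Hypothetical shift: 400 planned minutes, 40 minutes down, 27 s ideal cycle,
# 720 parts made, 684 of them good.
a, p, q, total = oee(ShiftCounts(400, 40, 27, 720, 684))
print(f"availability={a:.2f} performance={p:.2f} quality={q:.2f} OEE={total:.3f}")
```

Because the three factors multiply, modest losses compound: 90% availability, 90% performance, and 95% quality give an OEE of only about 77%.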

We have extracted all we “know” about these manufacturing processes. How do we find out what we don’t know? ANALYTICS!

Industrial Analytics

Analytics, or more precisely industrial analytics, is the next phase of productivity improvement in manufacturing. We have all the data, in fact more data than any person could possibly understand. Industrial big data has a lot of information locked within it: unseen correlations that lead to quality, safety, and equipment issues. Analytics will allow us to discover these correlations and go beyond knowing what happened to understanding why it happened. Once we know why something happened (diagnostics), we can start working on how to manage it and mitigate its effect going forward.

Analytics will buy manufacturers time. Once a failure mode has been diagnosed, a predictive model can be built and deployed against live streaming machine and process data. These models can recognize problem patterns in the data and alert personnel well in advance, giving manufacturers critical time to deal with the situation proactively and mitigate, if not eliminate, its effects altogether. Here are a couple of examples:
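As a deliberately simple stand-in for a trained predictive model, the sketch below watches a stream of readings and flags any value that falls far outside the recent rolling window (a rolling z-score check). The function name, the 20-reading window, and the 3-sigma threshold are illustrative assumptions; a real deployment would score the stream with a model built for the specific failure mode that was diagnosed.

```python
from collections import deque
from statistics import mean, stdev
from typing import Iterable, Iterator


def rolling_zscore_alerts(readings: Iterable[float], window: int = 20,
                          threshold: float = 3.0) -> Iterator[tuple[int, float, float]]:
    """Yield (index, value, z) for each reading that deviates from the previous
    `window` readings by at least `threshold` sample standard deviations."""
    history: deque = deque(maxlen=window)  # oldest reading drops off automatically
    for i, value in enumerate(readings):
        if len(history) == window:
            mu, sigma = mean(history), stdev(history)
            if sigma > 0:  # a perfectly flat window has no spread to compare against
                z = (value - mu) / sigma
                if abs(z) >= threshold:
                    yield i, value, z
        history.append(value)


# Hypothetical stream: a steady signal followed by one abrupt jump.
stream = [50.0, 50.5] * 10 + [60.0]
for index, value, z in rolling_zscore_alerts(stream):
    print(f"alert at reading {index}: value={value}, z={z:.1f}")
```

Because the function is a generator, it can consume a live feed (any iterable of readings) one value at a time. Each reading is scored against the window before it is appended, so an outlier cannot mask itself.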

Asset Availability – Manufacturers rely on their assets (machines/equipment) to perform at their engineered capacity, be available when planned, and produce high-quality product. One of the major problems is unplanned downtime. Think of your car and how you maintain it. You schedule an oil change when it's convenient for you, and maybe take care of a recall at the same time; this is planned downtime, no big deal. Unplanned downtime is when your car breaks down in the middle of rush hour. The time, cost, and inconvenience are far greater than that oil change. In a manufacturing environment, unplanned downtime can easily amount to millions of dollars in missed production schedules and overtime. Most equipment has control limits that prevent it from damaging itself and the equipment around it. These limits, for example on temperature, are typically set for extreme operating conditions, like the hottest day in summer and the coldest day in winter. But lots of bad stuff can happen between those limits. Analytics can model the temperature, along with any other correlated data points, and provide an alert, often days or weeks in advance, that these variables are trending toward a possible issue or even a failure. This gives the maintenance staff ample time to address the situation at a convenient time, before unplanned downtime occurs.

Process understanding – Production processes are designed to follow a standardized flow, so variation in that process is the root of all evil. Variation can lead to incidents and excursions in product quality, machine efficiency, and safety. In many cases, the causes of this variation are unknown correlations within the machine and process data. Advanced analytics can determine each sensor’s (data source’s) contribution to the variation. Once this is understood, additional control algorithms or processes can be put in place to not only stabilize a production process but optimize it as well.

Manufacturers have invested in a number of technologies to measure and control their operations. Yet issues with quality, equipment availability, and safety are still commonplace. Additional insight can be gleaned from this data through advanced and predictive analytics, providing a path to improvement in all these areas. What's your story? Do you have a horror story of data left untapped? Or keep it positive with a success story of finding insights in your data.